Quality of scientific literature: A report from the 2017 Peer Review Congress

2017 Peer Review Congress

The Editage team was one of the exhibitors at the Eighth International Congress on Peer Review and Scientific Publication, held in Chicago from September 9 to September 12, 2017. For those who could not attend, our team presents timely reports from the conference sessions to help you stay on top of the peer review-related discussions among the most prominent academics.

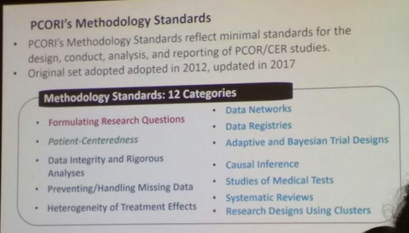

After some interesting sessions on the quality of reporting, it was time to discuss the quality of scientific literature. The session opened with an excellent presentation from Dr. Harold Sox of the Patient-Centered Outcomes Research Institute (PCORI), whose group has assessed scientific quality in a series of comparative effectiveness research (CER) studies. The group analyzed CER studies funded by PCORI during its first funding cycle (2013). The original applications were used to study principal investigator (PI)-related variables potentially associated with study quality, as well as adherence to PCORI's methodological criteria, which were established in 2012 and recently updated. Twenty more reports will be added once their peer review is complete. By following 300 studies through July 2019, the project aims to measure specific methodological shortcomings.

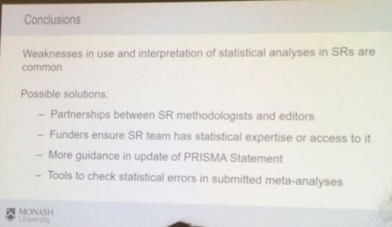

The next presentation, by Matthew J. Page, focused on shortcomings in how statistical methods are used and interpreted in systematic reviews of therapeutic interventions. His group analyzed the statistical methods and their interpretation in a broad set of 110 systematic reviews: 32 Cochrane and 78 non-Cochrane. In over 70% of the reviews, the choice of statistical model was not justified on clinical grounds. Moreover, in many reviews, pooled results were not interpreted as an average effect and prediction intervals were not reported. In many cases, overlapping confidence intervals were not explained, and possible reasons for asymmetry in funnel plots were not discussed. The findings clearly highlight the need for better statistical analysis. They also raised questions about whether the guidance in the Cochrane Handbook is clear, and whether it is being understood and followed.

During the Q&A, Leslie Citrome, Editor-in-Chief of the International Journal of Clinical Practice, shared that he decides whether to reject a manuscript or send it for peer review based on the study design and the quality of the data. However, he depends on peer reviewers to vet the statistical methods used in systematic reviews. He said a tool to analyze statistical methods would make his life as a journal editor much easier.

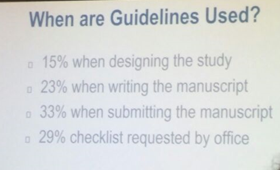

Next up was Marc Dewey, an associate editor at Radiology, who spoke about reproducibility issues in imaging journals such as Radiology. He shared the results of a survey of authors and reviewers of studies submitted to Radiology between 2016 and 2017, which asked about the journal's reporting guidelines and checklists for contributors. Most authors reported using these guidelines when writing their manuscripts, and almost 80% of authors and 50% of reviewers found the guideline checklists useful. However, the discussion after Dewey's presentation indicated a lack of awareness of reporting guidelines such as CONSORT for clinical trials and of the EQUATOR Network, and many authors did not cite these guidelines in their manuscripts. Many of these guidelines are meant to be used when a study is being designed, yet researchers were consulting them only while preparing the manuscript.

Jeannine Botos from JNCI-Oxford University Press then took the podium to discuss the use of standard reporting guidelines (SRGs) among JNCI authors and how their use related to editorial outcomes and reviewer ratings. JNCI rejects more than 75% of submitted manuscripts before peer review. Although the use of SRGs was not associated with editorial outcomes or reviewer ratings, reviewer ratings of adherence to guidelines and of clarity of presentation were associated with the editorial decision after peer review.

Emily Sena from the University of Edinburgh discussed the effect of an intervention aimed at improving compliance with the ARRIVE guidelines for reporting in vivo animal research. The ARRIVE guidelines were published in 2010 and have been endorsed by all major UK funders and over 1,000 journals. However, endorsement did not mean that these journals were enforcing the guidelines. The results of this study open the door to changing editorial policies so that an ARRIVE checklist is checked at the time of manuscript submission.

All presentations in this session highlighted the poor quality of studies, especially systematic reviews of clinical trials. Developing more and more guidelines will only confuse authors further and will not lead to better adherence. Should they even be called 'guidelines'? Why not call them rules and enforce them at the time of manuscript submission? Some journals probably lack the bandwidth to check whether guidelines are being followed. Nor can they shift the burden onto peer reviewers, who are already underappreciated and uncompensated for their efforts. Is there a solution? Most proposed solutions only seem to create a different set of problems.

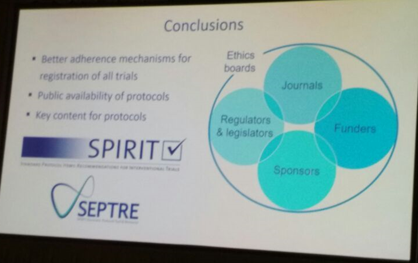

The next session, on trial registration, began with An-Wen Chan from the University of Toronto, who compared protocols, registries, and published articles to understand the association between trial registration and the reporting of trials. Besides going unregistered and unpublished, clinical trials often show discrepancies in how their primary outcomes are reported across these sources. Journal editors, legislators, funders, regulators, and ethics committees must mandate trial registration and give the public access to all protocols. Greater transparency can help curb biased reporting of trial results.

Constance Zou from the Yale School of Medicine discussed the impact of the FDA Amendments Act (FDAAA) on trial registration, results reporting, and publication. Her study concluded that the FDAAA mitigated selective publication and reporting of clinical trial results and improved the availability of evidence for physicians and patients making informed decisions about care for neuropsychiatric illnesses.

Rebecca J. Williams from ClinicalTrials.gov then shared her team's evaluation of results posted on ClinicalTrials.gov and their relationship to the peer-reviewed literature. They found that, among completed or terminated trials registered on ClinicalTrials.gov, between 33% and 57% had reported results there but had not been cited in any PubMed article. This suggests that ClinicalTrials.gov is the only available source of results for many trials.

Day 2 ended with a shared sense that trial registration, and the way it is carried out, must improve. Transparency and public access will hopefully lead to cleaner scientific literature in the future.

We'll bring you the reports from Day 3 soon. Stay tuned!

Related reading:

- Biases in scientific publication: A report from the 2017 Peer Review Congress

- Research integrity and misconduct in science: A report from the 2017 Peer Review Congress

- Quality of reporting in scientific publications: A report from the 2017 Peer Review Congress

- Exploring funding and grant reviews: A report from the 2017 Peer Review Congress

- Editorial and peer-review process innovations: A report from the 2017 Peer Review Congress

- Post-publication issues and peer review: A report from the 2017 Peer Review Congress

- Pre-publication and post-publication issues: A report from the 2017 Peer Review Congress

- A report of posters exhibited at the 2017 Peer Review Congress

- 13 Takeaways from the 2017 Peer Review Congress in Chicago

Download the full report of the Peer Review Congress

This post summarizes some of the sessions presented at the Peer Review Congress. For a comprehensive overview, download the full report of the event below.

An overview of the Eighth International Congress on Peer Review and Scientific Publication and International Peer Review Week 2017 (PDF)

Published on: Sep 13, 2017
