Quality of reporting in scientific publications: A report from the 2017 Peer Review Congress

The Editage team was one of the exhibitors at the Eighth International Congress on Peer Review and Scientific Publication, held in Chicago from September 9 to September 12, 2017. For those who were unable to attend the Congress, our team presents timely reports from the conference sessions to help you stay on top of the peer review-related discussions among the most prominent academics.

After the keynote address by David Moher from the Ottawa Hospital Research Institute, Day 2 of the Eighth International Congress on Peer Review and Scientific Publication shifted gears with sessions on the quality of reporting.

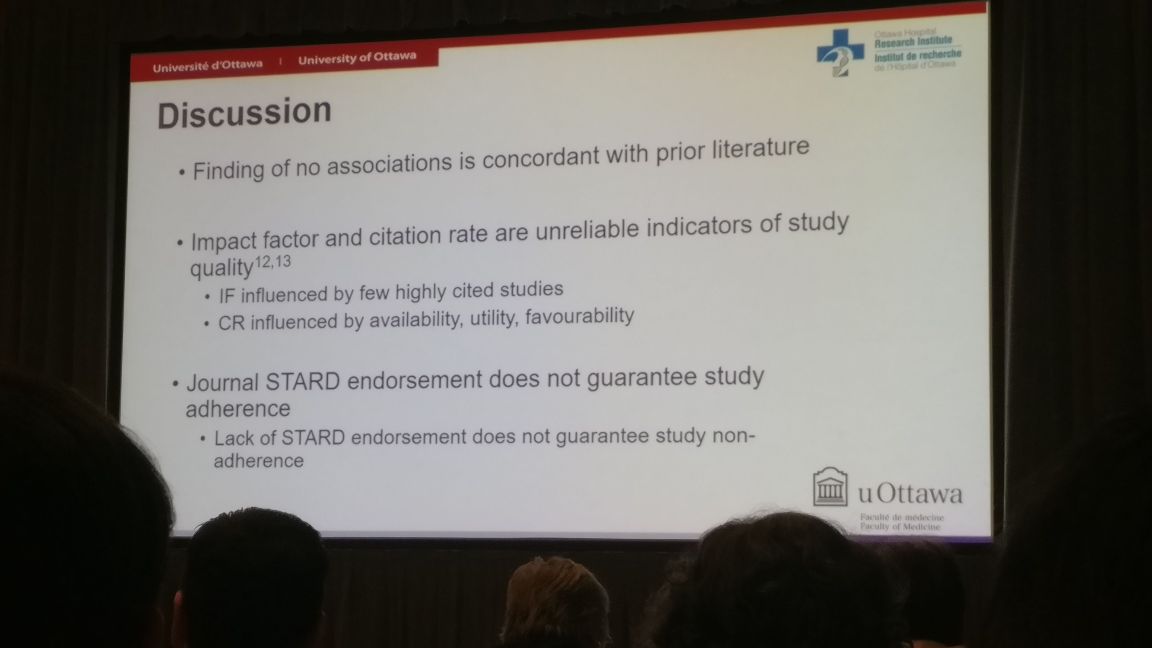

Robert Frank from the University of Ottawa presented his group’s study, which analyzed the variables associated with false results in imaging research. The group treated meta-analyses (MAs) as the closest proxy to the truth in imaging studies. Study-level variables (citation rate, publication order, and publication timing relative to STARD) and journal-level variables (impact factor, STARD endorsement, and cited half-life) were examined for their association with the distance between primary results and summary estimates from MAs. After analyzing 98 MAs containing 1458 primary studies, the study concluded that this distance was no smaller for studies published in high impact factor (IF) journals than for those published in lower-IF journals. The conclusions re-emphasized that higher IFs and citation rates are not indicative of better study quality.

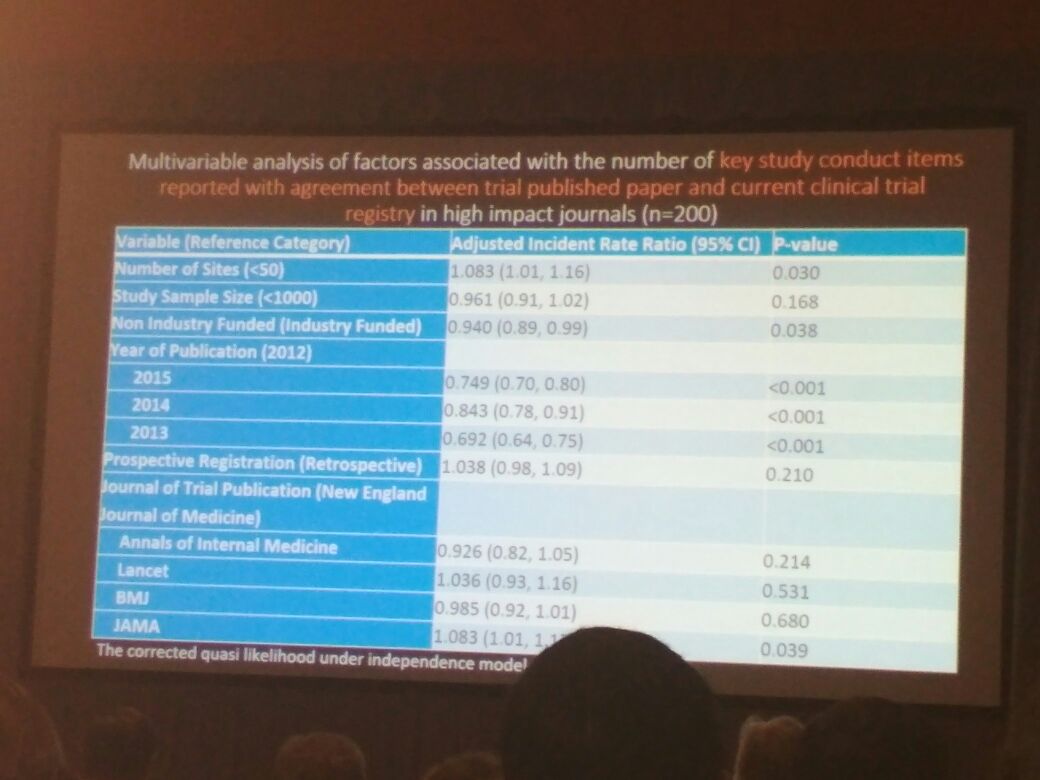

Next up was Sarah Daisy Kosa from McMaster University and Toronto General Hospital Health Network. Her study examined discrepancies between trial data reported in publications and the data updated in clinical trial registries for studies published in high-IF journals. Her research group searched PubMed for all randomized clinical trials (RCTs) published between 2012 and 2015 in the top 5 medicine journals (as per the 2014 IF published by Thomson Reuters). Among the 200 RCTs they studied, they observed discrepancies between the publications and the corresponding clinical trial registry entries. Their study clearly highlighted the need for uniform reporting of clinical data in publications and registries in order to preserve the quality and integrity of scientific research.

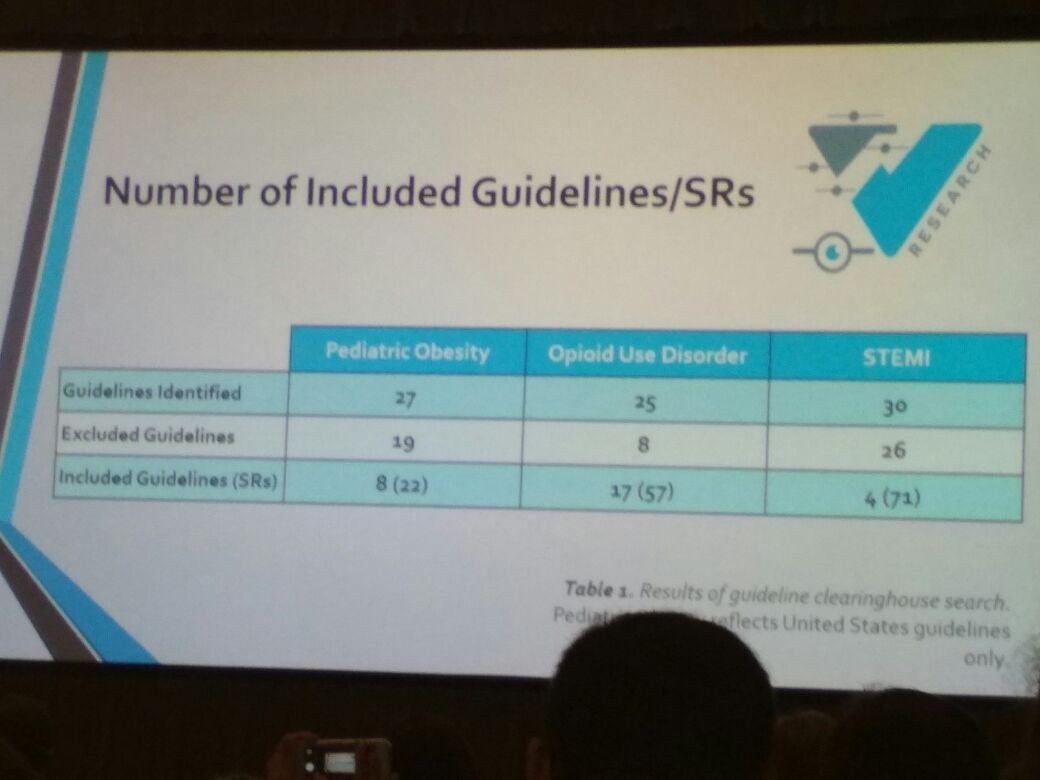

Cole Wayant from Oklahoma State University Center for Health Sciences then took the stage to discuss the quality of methods and reporting of systematic reviews (SRs) underpinning clinical practice guidelines. His study analyzed MAs and SRs against the PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) and AMSTAR (A Measurement Tool to Assess Systematic Reviews) checklists. It found that many items on these checklists were missing from the MAs and SRs, re-emphasizing the need for higher-quality SRs. This matters because SRs are considered level 1A evidence, so poorly conducted or poorly reported SRs can impair clinical decisions and thereby hinder evidence-based medicine.

The final and perhaps the most engaging presentation of the session was delivered by Kaveh Zakeri, a medical resident from the University of California, San Diego. His study analyzed the existence of optimism bias in contemporary National Clinical Trials Network phase III trials. Zakeri’s group identified 185 studies in PubMed, published between January 2007 and January 2017, that were phase III randomized cooperative group clinical trials. By comparing the proposed and observed effect sizes, they calculated proposed-to-observed hazard ratios (HRs) that were indicative of therapy benefit and reduction in adverse effects. Their results led them to conclude that most NCI-sponsored phase III trials could not demonstrate statistically significant benefits of new therapies. They suggested that although the magnitude of optimism bias had decreased compared to studies conducted between 1955 and 2006, clinical trial protocols still needed better rationales for their proposed effect sizes. Many trials in highly specialized fields cannot be repeated, and their sample sizes can be small because not enough patients can be recruited. Even so, most phase II and phase III trials provided no rationale at all for the proposed effect size.

We'll cover the sessions of the second half in the next part of the report. The post-lunch sessions will discuss scientific literature, trial registration, and funding/grant review.

Related reading:

- Biases in scientific publication: A report from the 2017 Peer Review Congress

- Research integrity and misconduct in science: A report from the 2017 Peer Review Congress

- Exploring funding and grant reviews: A report from the 2017 Peer Review Congress

- Quality of scientific literature: A report from the 2017 Peer Review Congress

- Editorial and peer-review process innovations: A report from the 2017 Peer Review Congress

- Post-publication issues and peer review: A report from the 2017 Peer Review Congress

- Pre-publication and post-publication issues: A report from the 2017 Peer Review Congress

- A report of posters exhibited at the 2017 Peer Review Congress

- 13 Takeaways from the 2017 Peer Review Congress in Chicago

Download the full report of the Peer Review Congress

This post summarizes some of the sessions presented at the Peer Review Congress. For a comprehensive overview, download the full report of the event below.

An overview of the Eigth International Congress on Peer Review and Scientific Publication and International Peer Review Week 2017_4_0.pdf

Published on: Sep 12, 2017
