Misplaced trust? The problems in science publication

The October 19th issue of The Economist spotlighted an article that hits very close to home for me as an editor in Molecular Biology and Genetics. It seems that science is failing itself these days, with several issues eating away at the foundation of trust built over the last few decades and impeding our search for solutions to various challenges and a greater understanding of the world. As the number of scientists conducting research has reached unforeseen heights, it appears that the quality of conducted and published research has reached new lows. The lapses in maintaining science’s solid foundation are varied and interwoven. Errors arise in study design, in the understanding of statistical significance, and in reviewing and publication, and they are compounded by the culture of competition that now defines the scientific field.

The “minimal-threshold” journal PLoS One rejects papers for publication only when there are procedural gaps in the study design. Even at this basic level, close to half the submissions are rejected. The situation gets graver as the amount of data available to researchers increases. In subatomic physics, investigators believed they had found quarks appearing in groups of 5, when they are usually found only in groups of 2 or 3. Scrutiny of the study design revealed that data analysis in the study was not properly blinded. The aberrant quarks were no longer observed once this oversight was corrected. Similarly, results published in 2010 that linked gene variants with increased lifespan had to be retracted the next year after the realization that specimens acquired from centenarians were treated differently from those acquired from younger participants.

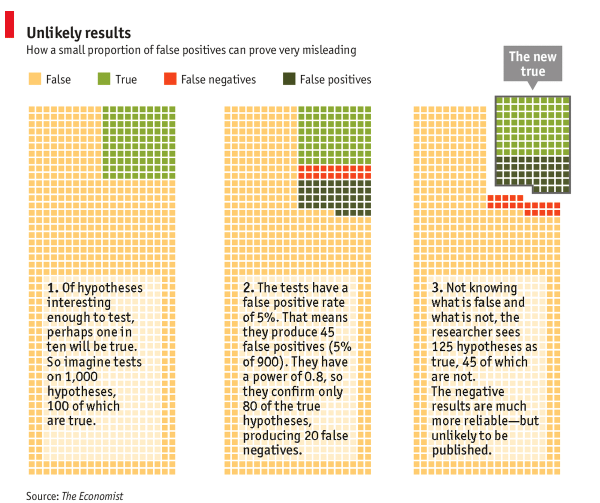

Most modern scientists hypothesize, study, and aim to publish new and surprising positive results. However, in a counterintuitive twist, it is the negative results obtained from these suppositions that are more accurate. Statistically speaking, a study with a power of 0.8 detects 8 out of every 10 true effects and misses the other 2 as false negatives. At the same time, under the conventional 5% significance threshold, 5% of the hypotheses that are actually false will appear to be true; these are the false positives. Suppose that 1,000 hypotheses are tested and that only 100 of them are true. The studies will correctly confirm 80 of the 100 true hypotheses, while 45 of the 900 false ones will also come back positive. What scientists then report are what they see as all the positive results, 125 positives, of which only 64% are correct. On the other hand, the same 1,000 tests yield 875 negative results, including 20 false negatives, so close to 98% of the negatives are correct. Clearly, findings disproving a hypothesis are more reliable than those proving it to be true, but only a dwindling minority of published works describes such outcomes. Reporting negative results also has the added benefit of preventing a waste of resources in future experiments or clinical trials that probe the same concepts. In a further statistical complication, while scientists understand that statistical significance plays a major role in determining how important an experiment’s results are, many do not fully grasp the subtleties of the equations they use to report their findings, and they often choose the formulae they are most comfortable with or that are included in their software.
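The arithmetic in the paragraph above can be checked with a short sketch. It assumes, as the quoted figures imply, that 100 of the 1,000 hypotheses are actually true (80 true positives at a power of 0.8, and 45 false positives at a 5% rate among the remaining 900). The function name `screening_outcomes` and its parameter names are hypothetical, chosen only for this illustration.

```python
def screening_outcomes(n_hypotheses, n_true, power, alpha):
    """Count true/false positives and negatives for a batch of tested hypotheses."""
    n_false = n_hypotheses - n_true
    true_pos = power * n_true        # true hypotheses that are detected
    false_neg = n_true - true_pos    # true hypotheses that are missed
    false_pos = alpha * n_false      # false hypotheses that look true
    true_neg = n_false - false_pos   # false hypotheses correctly rejected
    return {
        "true_pos": true_pos,
        "false_neg": false_neg,
        "false_pos": false_pos,
        "true_neg": true_neg,
    }


# Assumption: 100 of the 1,000 hypotheses are true (implied by the figures above).
counts = screening_outcomes(n_hypotheses=1000, n_true=100, power=0.8, alpha=0.05)

positives = counts["true_pos"] + counts["false_pos"]
negatives = counts["true_neg"] + counts["false_neg"]

ppv = counts["true_pos"] / positives   # share of positive results that are correct
npv = counts["true_neg"] / negatives   # share of negative results that are correct

print(f"positives: {positives:.0f}, of which correct: {ppv:.0%}")
# positives: 125, of which correct: 64%
print(f"negatives: {negatives:.0f}, of which correct: {npv:.1%}")
# negatives: 875, of which correct: 97.7%
```

Changing `n_true` shows how strongly the reliability of positive results depends on the share of tested hypotheses that are true to begin with, which is the one input the reported numbers leave implicit.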

The publication process, which includes the highly regarded peer review system that science is so proud of, is also falling short of expectations. Publishers overwhelmingly favor the publication of something previously unreported and the error-prone positive results mentioned above. Reviewers themselves are often unable to spot major mistakes in the manuscripts they are checking, as demonstrated by incognito studies of the reviewing process. In one study, just over 50% of the journals to which the author submitted their obviously faulty research paper deemed the findings publishable. In another assessment that focused on the respected British Medical Journal, reviewers generally found 2 or fewer out of 8 deliberately included errors, while some did not find any at all.

Finally, the competitive culture now ingrained within the scientific community is impeding our progress more than anything else. In a “publish-or-perish” environment, careers depend on publishing a greater quantity of results, whether they are reliable or not. Data from surveys taken over 20 years have revealed that 2% of participants admitted to falsifying data themselves in order to publish their papers, while 28% admitted knowing coworkers whose methods were debatable. Competition is also making the scientific community less open to sharing data and methods, which, in turn, hampers the few attempts made at reproducing new experiments. One of the hallmarks of scientific research, and the main reason for its reliability, is reproducibility: the same experiment should yield the same results each time it is performed. Unfortunately, much of the research published these days is not reproducible. Attempts to recreate some supposedly landmark experiments succeeded in a mere 6 out of 53 cases at Amgen and in only a quarter of 67 cases at Bayer. Even more disturbing is the fact that, between 2000 and 2010, close to 80,000 patients took part in clinical trials based on findings that were later retracted because of mistakes and misconduct. While scientists accept that it is impossible to be flawless, they are hesitant to correct their mistakes and withdraw erroneous results.

Several solutions have been proposed to begin restoring confidence in science, such as having journals screen for errors more strictly and rewarding investigators for quality of research rather than quantity, but putting such suggestions into practice will be far harder than proposing them. The amount of inaccuracy science accepts nowadays is alarming, and we can only hope that the scientific community resolves its woes before the damage to its credibility is irreversible.

For another perspective on the emphasis on novel findings, see here.

Published on: Jan 23, 2014
